As AI tools have become increasingly sophisticated and accessible, so too has one of their worst applications: non-consensual deepfake pornography. While much of this content is hosted on dedicated sites, more and more of it is finding its way onto social platforms. Today, the Meta Oversight Board announced that it was taking on cases that could force the company to reckon with how it deals with deepfake porn.

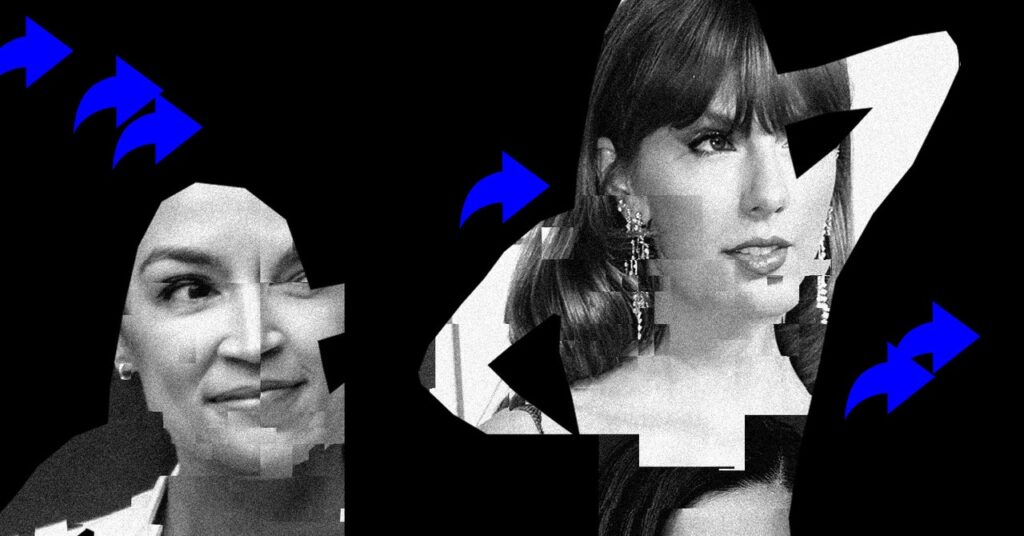

The board, an independent body that can issue both binding decisions and recommendations to Meta, will focus on two deepfake porn cases, both concerning celebrities whose images were altered to create explicit content. In one case, involving an unnamed American celebrity, deepfake porn depicting the celebrity was removed from Facebook after it had already been flagged elsewhere on the platform. The post was also added to Meta’s Media Matching Service Bank, an automated system that finds and removes images that have already been flagged as violating Meta’s policies, to keep it off the platform.

In the other case, a deepfake image of an unnamed Indian celebrity remained up on Instagram, even after users reported it for violating Meta’s policies on pornography. According to the announcement, the deepfake of the Indian celebrity was removed once the board took up the case.

In both cases, the images were removed for violating Meta’s policies on bullying and harassment, and did not fall under Meta’s policies on porn. Meta does, however, prohibit “content that depicts, threatens or promotes sexual violence, sexual assault or sexual exploitation” and does not allow porn or sexually explicit ads on its platforms. In a blog post released in tandem with the announcement of the cases, Meta said it removed the posts for violating the “derogatory sexualized photoshops or drawings” portion of its bullying and harassment policy, and that it also “determined that it violated [Meta’s] adult nudity and sexual activity policy.”

According to Julie Owono, an Oversight Board member, the board hopes to use these cases to examine Meta’s policies and systems for detecting and removing non-consensual deepfake pornography. “I can tentatively already say that the main problem is probably detection,” she says. “Detection is not as perfect or at least is not as efficient as we would wish.”

Meta has also long faced criticism for its approach to moderating content outside the US and Western Europe. In this instance, the board has already voiced concerns that the American celebrity and the Indian celebrity received different treatment when deepfakes of them appeared on the platform.

“We know that Meta is quicker and more effective at moderating content in some markets and languages than others. By taking one case from the United States and one from India, we want to see if Meta is protecting all women globally in a fair way,” says Oversight Board cochair Helle Thorning-Schmidt. “It’s important that this matter is addressed, and the board looks forward to exploring whether Meta’s policies and enforcement practices are effective at addressing this problem.”