Getty Images

Instagram is overhauling the way it works for teenagers, promising more “built-in protections” for young people and added controls and reassurance for parents.

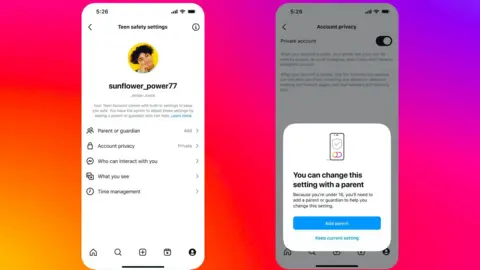

The new “teen accounts”, for children aged 13 to 15, will force many privacy settings to be on by default, rather than a child opting in.

Teenagers’ posts will also be set to private – making them unviewable to people who do not follow them, and they must approve all new followers.

These settings can only be changed by giving a parent or guardian oversight of the account, or when the child turns 16.

Social media companies are under pressure worldwide to make their platforms safer, with concerns that not enough is being done to shield young people from harmful content.

The NSPCC called the announcement a “step in the right direction” but said Instagram’s owner, Meta, appeared to be “putting the emphasis on children and parents needing to keep themselves safe.”

Rani Govender, the NSPCC’s online child safety policy manager, said Meta and other social media companies needed to take more action themselves.

“This must be backed up by proactive measures that prevent harmful content and sexual abuse from proliferating on Instagram in the first place, so all children enjoy comprehensive protections on the products they use,” she said.

Meta describes the changes as a “new experience for teens, guided by parents”, and says they will “better support parents, and give them peace of mind that their teens are safe with the right protections in place.”

However, media regulator Ofcom raised concerns in April over parents’ willingness to intervene to keep their children safe online.

In a talk last week, senior Meta executive Sir Nick Clegg said: “One of the things we do find… is that even when we build these controls, parents don’t use them.”

Ian Russell, whose daughter Molly viewed content about self-harm and suicide on Instagram before taking her life aged 14, told the BBC it was important to wait and see how the new policy was implemented.

“Whether it works or not we’ll only find out when the measures come into place,” he said.

“Meta is very good at drumming up PR and making these big announcements, but what they also have to be good at is being transparent and sharing how well their measures are working.”

How will it work?

Teen accounts will mostly change the way Instagram works for users between the ages of 13 and 15, with a number of settings turned on by default.

These include strict controls on sensitive content to prevent recommendations of potentially harmful material, and muted notifications overnight.

Accounts will also be set to private rather than public – meaning teenagers will have to actively accept new followers and their content cannot be viewed by people who do not follow them.

Instagram

Parents who choose to supervise their child’s account will be able to see who they message and the topics they have said they are interested in – though they will not be able to view the content of messages.

Instagram says it will begin moving millions of existing teen users into the new experience within 60 days of notifying them of the changes.

Age identification

The system will primarily rely on users being honest about their ages – though Instagram already has tools that seek to verify a user’s age if there are suspicions they are not telling the truth.

From January, in the US, it will also start using artificial intelligence (AI) tools to try to proactively detect teens using adult accounts, to put them back into a teen account.

The UK’s Online Safety Act, passed earlier this year, requires online platforms to take action to keep children safe, or face huge fines.

Ofcom warned social media sites in May they could be named and shamed – and banned for under-18s – if they fail to comply with new online safety rules.

Social media industry analyst Matt Navarra described the changes as significant – but said they hinged on enforcement.

“As we have seen with teens throughout history, in these sorts of scenarios, they will find a way around the blocks, if they can,” he told the BBC.

“So I think Instagram will need to ensure that safeguards cannot easily be bypassed by more tech-savvy teens.”

Questions for Meta

Instagram is by no means the first platform to introduce such tools for parents – and it already claims to have more than 50 tools aimed at keeping teens safe.

It launched a family centre and supervision tools for parents in 2022 that allowed them to see the accounts their child follows and who follows them, among other features.

Snapchat also launched its own family centre, letting parents over the age of 25 see who their child is messaging and limit their ability to view certain content.

In early September YouTube said it would limit recommendations of certain health and fitness videos to teenagers, such as those which “idealise” certain body types.

Instagram already uses age verification technology to check the age of teens who try to change their age to over 18, through a video selfie.

This raises the question of why, despite the large number of protections on Instagram, young people are still exposed to harmful content.

An Ofcom study earlier this year found that every single child it spoke to had seen violent material online, with Instagram, WhatsApp and Snapchat being the most frequently named services they found it on.

While they are also among the biggest, it is a clear indication of a problem that has not yet been solved.

Under the Online Safety Act, platforms will have to show they are committed to removing illegal content, including child sexual abuse material (CSAM) or content that promotes suicide or self-harm.

But the rules are not expected to fully take effect until 2025.

In Australia, Prime Minister Anthony Albanese recently announced plans to ban social media for children by bringing in a new age limit for kids to use platforms.

Instagram’s latest tools put control more firmly in the hands of parents, who will now take even more direct responsibility for deciding whether to allow their child more freedom on Instagram, and supervising their activity and interactions.

They will of course also need to have their own Instagram account.

But ultimately, parents do not run Instagram itself and cannot control the algorithms which push content towards their children, or what is shared by its billions of users around the world.

Social media expert Paolo Pescatore said it was an “important step in safeguarding children’s access to the world of social media and fake news.”

“The smartphone has opened up to a world of disinformation, inappropriate content fuelling a change in behaviour among children,” he said.

“More needs to be done to improve children’s digital wellbeing and it starts by giving control back to parents.”