A new computing paradigm, thermodynamic computing, has entered the scene. Okay, okay, maybe it’s just probabilistic computing by a new name. Both use noise (such as that caused by thermal fluctuations) to perform computations instead of fighting it. But still, it’s a new physical approach.

“If you’re talking about computing paradigms, no, it’s the same computing paradigm” as probabilistic computing, says Behtash Behin-Aein, the CTO and founder of probabilistic computing startup Ludwig Computing (named after Ludwig Boltzmann, a scientist largely responsible for the field of, you guessed it, thermodynamics). “But it’s a new implementation,” he adds.

In a recent publication in Nature Communications, New York-based startup Normal Computing detailed its first prototype of what it calls a thermodynamic computer. The team demonstrated that it can use the device to harness noise to invert matrices. They also demonstrated Gaussian sampling, which underlies some AI applications.

How Noise Can Help Some Computing Problems

Conventionally, noise is the enemy of computation. However, certain applications actually rely on artificially generated noise. And using naturally occurring noise instead can be vastly more efficient.

“We’re focusing on algorithms that are able to leverage noise, stochasticity, and non-determinism,” says Zachery Belateche, silicon engineering lead at Normal Computing. “That algorithm space turns out to be huge, everything from scientific computing to AI to linear algebra. But a thermodynamic computer is not going to be helping you check your email anytime soon.”

For these applications, a thermodynamic (or probabilistic) computer starts out with its components in some semi-random state. Then, the problem the user is trying to solve is programmed into the interactions between the components. Over time, these interactions allow the components to come to equilibrium. This equilibrium is the solution to the computation.
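To make that principle concrete, here is a toy software simulation, not a model of any company’s hardware. It assumes the “problem” is a linear system A x = b with a symmetric positive definite A, encoded as the energy E(x) = 0.5 xᵀA x − bᵀx, and it uses overdamped Langevin dynamics (noisy gradient descent) as a stand-in for the physics. The function name `relax_to_equilibrium` and every parameter value are hypothetical choices for illustration.

```python
import math
import random


def relax_to_equilibrium(A, b, temperature=0.01, dt=0.01,
                         steps=100_000, burn_in=10_000, seed=0):
    """Hypothetical sketch: solve A x = b by letting a noisy system settle.

    The state x follows overdamped Langevin dynamics on the energy
    E(x) = 0.5 * x^T A x - b^T x. For symmetric positive definite A, the
    equilibrium distribution is centered on the minimum of E, which is
    x* = inv(A) b, so the time-averaged state approximates the solution.
    """
    rng = random.Random(seed)
    n = len(b)
    # Start from a semi-random state.
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    noise_scale = math.sqrt(2.0 * temperature * dt)
    running_sum = [0.0] * n
    for step in range(steps):
        # The problem lives in the couplings: force = -grad E = b - A x.
        force = [b[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        # Deterministic relaxation plus thermal-style noise (Euler-Maruyama step).
        x = [x[i] + dt * force[i] + noise_scale * rng.gauss(0.0, 1.0)
             for i in range(n)]
        if step >= burn_in:
            # Once equilibrated, accumulate the state for a time average.
            running_sum = [s + xi for s, xi in zip(running_sum, x)]
    kept = steps - burn_in
    return [s / kept for s in running_sum]
```

For A = [[4, 1], [1, 3]] and b = [1, 2], the exact solution is [1/11, 7/11], roughly [0.091, 0.636], and the time average should land close to it. Lowering `temperature` shrinks the fluctuations around the equilibrium point, so fewer samples are needed for the same accuracy.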

This approach is a natural fit for certain scientific computing applications that already embrace randomness, such as Monte Carlo simulations. It is also well suited to the AI image-generation algorithm Stable Diffusion, and to a type of AI known as probabilistic AI. Surprisingly, it also appears to be well suited to some linear algebra computations that are not inherently probabilistic. This makes the approach more broadly applicable to AI training.

“Now we see with AI that the paradigm of CPUs and GPUs is being used, but it’s being used because it was there. There was nothing else. Say I found a gold mine. I want to basically dig it. Do I have a shovel? Or do I have a bulldozer? I have a shovel, just dig,” says Mohammad C. Bozchalui, the CEO and co-founder of Ludwig Computing. “We are saying this is a different world that requires a different tool.”

Normal Computing’s Approach

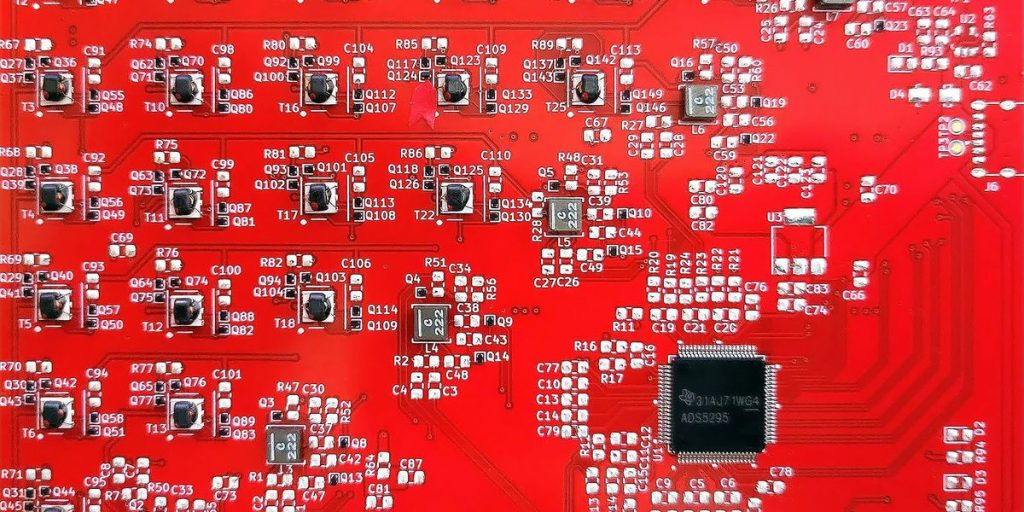

Normal Computing’s prototype chip, which the company calls the stochastic processing unit (SPU), consists of eight capacitor-inductor resonators and random noise generators. Each resonator is connected to every other resonator via a tunable coupler. The resonators are initialized with randomly generated noise, and the problem under study is programmed into the couplings. After the system reaches equilibrium, the resonators are read out to obtain the solution.
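The matrix-inversion idea can be illustrated in simplified form. The sketch below is an assumption-laden stand-in for the SPU: it replaces the coupled capacitor-inductor resonators with overdamped Langevin dynamics at unit temperature, dx = −A x dt + √2 dW, where the symmetric positive definite matrix A plays the role of the couplings. At equilibrium the state is Gaussian with covariance inv(A), so reading out the units repeatedly and averaging x xᵀ recovers the inverse. It uses three units rather than eight to keep the simulation fast; the name `sample_inverse` and all step sizes are hypothetical and do not describe Normal Computing’s actual method.

```python
import math
import random


def sample_inverse(A, dt=0.01, steps=200_000, burn_in=2_000, seed=1):
    """Hypothetical sketch: estimate inv(A) from equilibrium noise.

    Simulates dx = -A x dt + sqrt(2) dW (unit temperature). For symmetric
    positive definite A, the stationary distribution is Gaussian with mean 0
    and covariance inv(A), so the averaged outer product x x^T estimates inv(A).
    """
    rng = random.Random(seed)
    n = len(A)
    # Initialize every unit with random noise.
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    noise_scale = math.sqrt(2.0 * dt)
    second_moment = [[0.0] * n for _ in range(n)]
    for step in range(steps):
        # Each unit is pulled on by every other unit through the couplings A.
        drift = [sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [x[i] - dt * drift[i] + noise_scale * rng.gauss(0.0, 1.0)
             for i in range(n)]
        if step >= burn_in:
            # "Read out" the units and accumulate their correlations.
            for i in range(n):
                for j in range(n):
                    second_moment[i][j] += x[i] * x[j]
    kept = steps - burn_in
    # The equilibrium mean is zero, so the second moment is the covariance.
    return [[value / kept for value in row] for row in second_moment]
```

Multiplying A by the returned matrix should give approximately the identity. The statistical error shrinks only as one over the square root of the simulated time, and the forward-Euler step adds a small bias of order `dt`, so this is a demonstration of the principle rather than an efficient way to invert matrices in software.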

“In a conventional chip, everything is very highly controlled,” says Gavin Crooks, a staff research scientist at Normal Computing. “Take your foot off the control a little bit, and the thing will naturally start behaving more stochastically.”

Although this was a successful proof of concept, the Normal Computing team acknowledges that this prototype is not scalable. But they have amended their design, eliminating tricky-to-scale inductors. They now plan to build their next design in silicon, rather than on a printed circuit board, and expect their next chip to come out later this year.

How far this technology can be scaled remains to be seen. The design is CMOS-compatible, but there is a lot to be worked out before it can be used to solve large-scale real-world problems. “It’s amazing what they’ve done,” Bozchalui of Ludwig Computing says. “But at the same time, there’s a lot to be worked on to really take it from what it is today to a commercial product, to something that can be used at scale.”

A Different Vision

Although probabilistic computing and thermodynamic computing are essentially the same paradigm, there is a cultural difference. The companies and researchers working on probabilistic computing almost exclusively trace their academic roots to the group of Supriyo Datta at Purdue University. The three cofounders of Normal Computing, however, have no ties to Purdue and come from backgrounds in quantum computing.

As a result, the Normal Computing cofounders have a slightly different vision. They imagine a world where different kinds of physics are harnessed for their own computing hardware, and every problem that needs solving is matched with the best-suited hardware implementation.

“We coined this term physics-based ASICs,” Normal Computing’s Belateche says, referring to application-specific integrated circuits. In their vision, a future computer will have access to conventional CPUs and GPUs, but also a quantum computing chip, a thermodynamic computing chip, and any other paradigm people might dream up. And each computation will be sent to an ASIC that uses the physics most appropriate for the problem at hand.
