Fiber-optic cables are creeping closer to processors in high-performance computers, replacing copper connections with glass. Technology companies hope to speed up AI and lower its energy cost by moving optical connections from outside the server onto the motherboard and then having them sidle up alongside the processor. Now tech firms are poised to go even further in the quest to multiply the processor’s potential: by slipping the connections underneath it.

That’s the approach taken by Lightmatter, which claims to lead the pack with an interposer configured to make light-speed connections, not just from processor to processor but also between parts of the processor. The technology’s proponents claim it has the potential to significantly decrease the amount of power used in complex computing, an essential requirement for today’s AI technology to progress.

Lightmatter’s innovations have attracted the attention of investors, who have seen enough potential in the technology to raise US $850 million for the company, launching it well ahead of its competitors to a multi-unicorn valuation of $4.4 billion. Now Lightmatter is poised to get its technology, called Passage, running. The company plans to have the production version of the technology installed and running in lead-customer systems by the end of 2025.

Passage, an optical interconnect system, could be a crucial step toward increasing the computation speeds of high-performance processors beyond the limits of Moore’s Law. The technology heralds a future where separate processors can pool their resources and work in synchrony on the huge computations required by artificial intelligence, according to CEO Nick Harris.

“Progress in computing from now on is going to come from linking multiple chips together,” he says.

An Optical Interposer

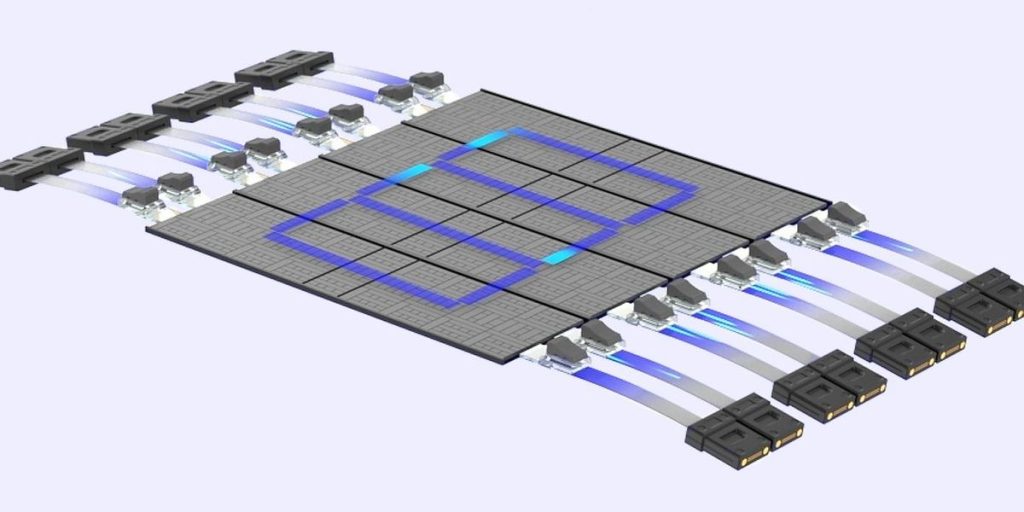

Fundamentally, Passage is an interposer, a slice of glass or silicon upon which smaller silicon dies, often called chiplets, are attached and interconnected within the same package. Many top server CPUs and GPUs these days are composed of multiple silicon dies on interposers. The scheme allows designers to connect dies made with different manufacturing technologies and to increase the amount of processing and memory beyond what’s possible with a single chip.

Today, the interconnects that link chiplets on interposers are strictly electrical. They are high-speed and low-energy links compared with, say, those on a motherboard. But they can’t compare with the impedance-free flow of photons through glass fibers.

Passage is cut from a 300-millimeter wafer of silicon containing a thin layer of silicon dioxide just below the surface. A multiband, external laser chip provides the light Passage uses. The interposer contains technology that can receive an electric signal from a chip’s standard I/O system, called a serializer/deserializer, or SerDes. As such, Passage is compatible with out-of-the-box silicon processor chips and requires no fundamental design changes to the chip.

Computing chiplets are stacked atop the optical interposer. Image credit: Lightmatter

From the SerDes, the signal travels to a set of transceivers called microring resonators, which encode bits onto laser light in different wavelengths. Next, a multiplexer combines the light wavelengths together onto an optical circuit, where the data is routed by interferometers and more ring resonators.

From the optical circuit, the data can be sent off the processor through one of the eight fiber arrays that line the opposite sides of the chip package. Or the data can be routed back up into another chip in the same processor. At either destination, the process is run in reverse: the light is demultiplexed and translated back into electricity, using a photodetector and a transimpedance amplifier.
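The signal path described above can be sketched as a toy model. This is only an illustration of wavelength-division multiplexing in general, not Lightmatter's design: the wavelength values, the four-channel count, the on-off keying scheme, and every function name here are assumptions made for the example.

```python
# Toy model of the path: SerDes lanes -> modulators -> multiplexer
# -> shared waveguide -> demultiplexer -> photodetectors.
# All names and numbers are hypothetical; the article gives no such values.

# Hypothetical wavelength grid in nanometers, in ascending order.
WAVELENGTHS_NM = [1300.0, 1302.0, 1304.0, 1306.0]


def modulate(bits, wavelength_nm):
    """Stand-in for a microring modulator: put bits on one wavelength.

    On-off keying is assumed: light on for 1, light off for 0.
    """
    return {
        "wavelength_nm": wavelength_nm,
        "intensity": [1.0 if bit else 0.0 for bit in bits],
    }


def multiplex(channels):
    """Combine per-wavelength channels onto one shared waveguide."""
    return {ch["wavelength_nm"]: ch["intensity"] for ch in channels}


def demultiplex(waveguide):
    """Split the shared waveguide back into channels, ordered by wavelength."""
    return [
        {"wavelength_nm": wl, "intensity": waveguide[wl]}
        for wl in sorted(waveguide)
    ]


def detect(channel, threshold=0.5):
    """Stand-in for photodetector plus transimpedance amplifier: light to bits."""
    return [1 if level > threshold else 0 for level in channel["intensity"]]


def send(lanes):
    """Carry each electrical lane on its own wavelength and recover it."""
    if len(lanes) > len(WAVELENGTHS_NM):
        raise ValueError("more lanes than available wavelengths")
    channels = [modulate(bits, wl) for bits, wl in zip(lanes, WAVELENGTHS_NM)]
    waveguide = multiplex(channels)
    return [detect(ch) for ch in demultiplex(waveguide)]


if __name__ == "__main__":
    print(send([[1, 0, 1, 1], [0, 0, 1, 0]]))
```

The point of the sketch is that several electrical lanes share one optical path without interfering, because each rides on its own wavelength and is separated again at the destination. Routing by interferometers and the choice between a fiber array and another chiplet are left out.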

The direct connection between any chiplet in a processor removes latency and saves energy compared with the typical electrical arrangement, which is often limited to what’s around the perimeter of a die.

That’s where Passage diverges from other entrants in the race to link processors with light. Lightmatter’s competitors, such as Ayar Labs and Avicena, produce optical I/O chiplets designed to sit in the limited space beside the processor’s main die. Harris calls this approach the “generation 2.5” of optical interconnects, a step above the interconnects situated outside the processor package on the motherboard.

Benefits of Optics

The advantages of photonic interconnects come from removing limitations inherent to electricity, which expends more energy the farther it must move data.

Photonic interconnect startups are built on the premise that those limitations must fall in order for future systems to meet the coming computational demands of artificial intelligence. Many processors across a data center will need to work on a task simultaneously, Harris says. But moving data between them over several meters with electricity would be “physically impossible,” he adds, and also mind-bogglingly expensive.

“The power requirements are getting too high for what data centers were built for,” Harris continues. Passage can enable a data center to use between one-sixth and one-twentieth as much energy, with efficiency increasing as the size of the data center grows, he claims. However, the energy savings that photonic interconnects make possible won’t lead to data centers using less power overall, he says. Instead of scaling back energy use, they’re more likely to consume the same amount of power, only on more-demanding tasks.
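The arithmetic behind that last point can be worked through in a few lines. This is a simplified reading of Harris's claim, assuming the one-sixth to one-twentieth figure applies uniformly to the energy needed per unit of work and that the power budget stays fixed; the function name is hypothetical.

```python
from fractions import Fraction


def work_at_fixed_power(energy_fraction):
    """Relative work possible on an unchanged power budget.

    `energy_fraction` is the energy needed per unit of work, relative to
    today's baseline (an assumption: the claimed savings apply uniformly).
    """
    if not 0 < energy_fraction <= 1:
        raise ValueError("energy_fraction must be in (0, 1]")
    return 1 / energy_fraction


if __name__ == "__main__":
    # The two ends of the claimed range: one-sixth and one-twentieth.
    for fraction in (Fraction(1, 6), Fraction(1, 20)):
        print(f"{fraction} of the energy -> {work_at_fixed_power(fraction)}x the work")
```

Under those assumptions, a facility that keeps drawing the same power would do between 6 and 20 times as much work, which is the sense in which the savings go into more-demanding tasks instead of a smaller power bill.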

AI Drives Optical Interconnects

Lightmatter’s coffers grew in October with a $400 million Series D fundraising round. The investment in optimized processor networking is part of a trend that has become “inevitable,” says James Sanders, an analyst at TechInsights.

In 2023, 10 percent of servers shipped were accelerated, meaning they contain CPUs paired with GPUs or other AI-accelerating ICs. These accelerators are the same as those that Passage is designed to pair with. By 2029, TechInsights projects, a third of servers shipped will be accelerated. The money being poured into photonic interconnects is a bet that they are the accelerant needed to profit from AI.
